In this article, we'll demonstrate how to use a HuggingFace dataset, create embeddings from the dataset, and split the dataset into two parts (training and test sets). You'll also learn to store all the created embeddings in the deployed Milvus database by creating a collection, then perform a search operation by giving a question prompt and generating the most similar answers.

Key Takeaways

- Milvus, an open-source vector database, is efficient for storing vector embeddings thanks to indexing options such as Approximate Nearest Neighbours (ANN), which enable fast and accurate results. This makes it useful for building recommendation and question-answering systems.

- A step-by-step guide is provided on how to deploy a server on Vultr, install the required packages, and build a question-answering architecture. This includes using a HuggingFace dataset, creating embeddings from the dataset, splitting it into training and test sets, and storing the embeddings in a Milvus database.

- The guide further explains how to tokenize and embed a prompt, perform similarity searches, and generate the most relevant responses. The system can handle custom prompts, and you can adjust the number of questions per prompt.

- The question-answering system uses the Milvus database and a HuggingFace dataset to perform similarity searches and find the most relevant answers for a given prompt. It does this by creating an embedding of the question provided and computing the distance between that embedding and the stored embeddings.

Deploying a server on Vultr

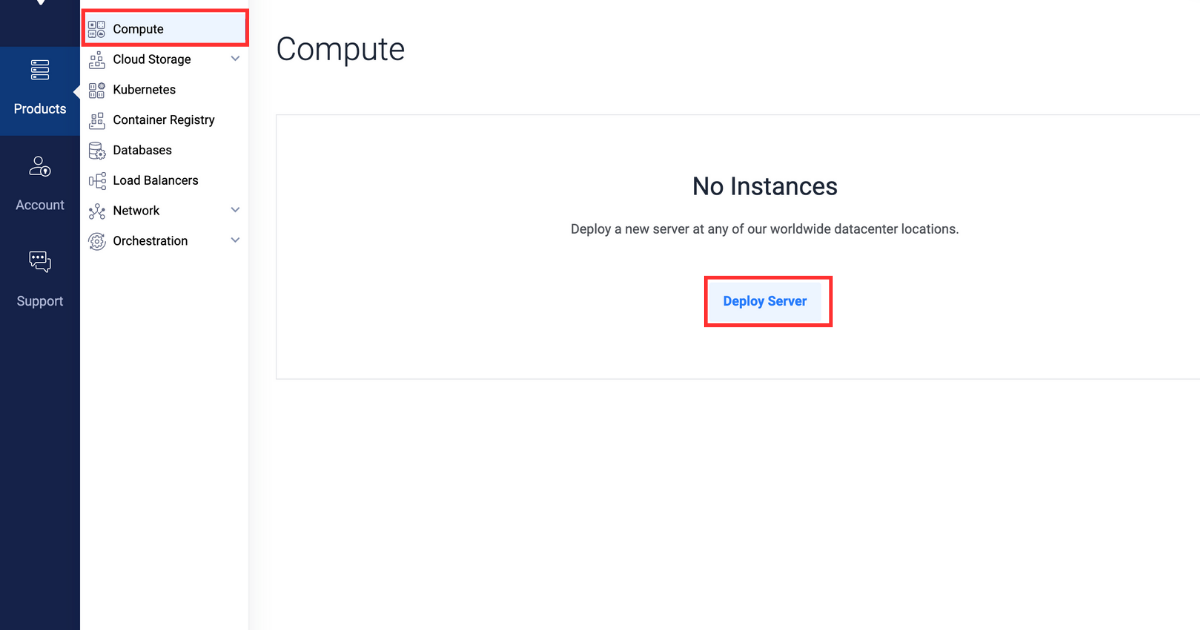

- Sign up and log in to the Vultr Customer Portal.

- Navigate to the Products page.

- From the side menu, select Compute.

- Click the Deploy Server button in the center.

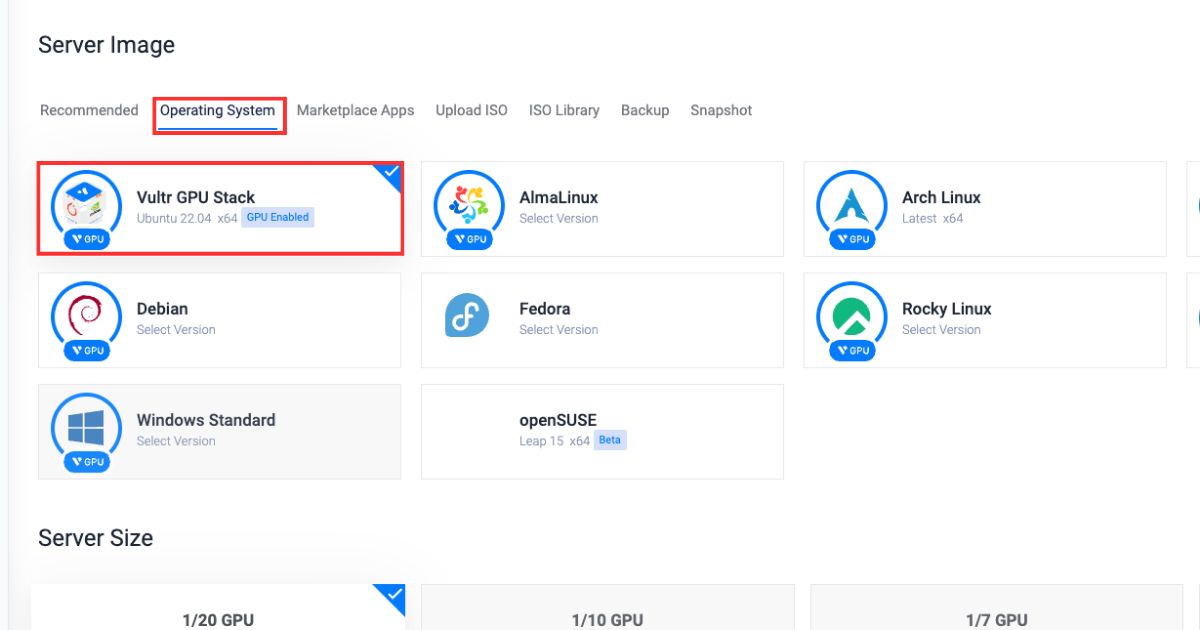

- Select Cloud GPU as the server type.

- Select A100 as the GPU type.

- In the “Server Location” section, select the region of your choice.

- In the “Operating System” section, select Vultr GPU Stack as the operating system.

Vultr GPU Stack is designed to streamline the process of building Artificial Intelligence (AI) and Machine Learning (ML) projects by providing a comprehensive suite of pre-installed software, including NVIDIA CUDA Toolkit, NVIDIA cuDNN, TensorFlow, PyTorch and so on.

- In the “Server Size” section, select the 80 GB option.

- Select any additional features as required in the “Additional Features” section.

- Click the Deploy Now button in the bottom right corner.

Next, deploy a Vultr Kubernetes cluster:

- Navigate to the Products page.

- From the side menu, select Kubernetes.

- Click the Add Cluster button in the center.

- Type in a Cluster Name.

- In the “Cluster Location” section, select the region of your choice.

- Type in a Label for the cluster pool.

- Increase the Number of Nodes to 5.

- Click the Deploy Now button in the bottom right corner.

Preparing the server

Installing the required packages

After setting up a Vultr server and a Vultr Kubernetes cluster as described earlier, this section will guide you through installing the Python dependency packages necessary for creating a Milvus database and importing the required modules in the Python console.

- Install the required dependencies.

      pip install transformers datasets pymilvus torch

  Here's what each package represents:

  - transformers: Provides access to pre-trained LLM models and lets you work with them for tasks like text classification and generation.
  - datasets: Provides access to ready-to-use datasets for NLP tasks and lets you work with them.
  - pymilvus: Python client for Milvus that enables vector similarity search, storage, and management of large collections of vectors.
  - torch: Machine learning library used for training and building deep learning models.

- Access the Python console.

      python3

- Import the required modules.

      from pymilvus import connections, FieldSchema, CollectionSchema, DataType, Collection, utility
      from datasets import load_dataset_builder, load_dataset, Dataset
      from transformers import AutoTokenizer, AutoModel
      # Note: importing sum from torch shadows Python's built-in sum() in this session.
      from torch import clamp, sum

  Here's what each module represents:

  pymilvus modules:

  - connections: Provides functions for managing connections with the Milvus database.
  - FieldSchema: Defines the schema of fields in a Milvus database.
  - CollectionSchema: Defines the schema of the collection.
  - DataType: Enumerates the data types that can be used in a Milvus collection.
  - Collection: Provides the functionality to interact with Milvus collections to create, insert, and search for vectors.
  - utility: Provides helper functions for working with Milvus, such as checking whether a collection exists and dropping it.

  datasets modules:

  - load_dataset_builder: Loads and returns a dataset builder object that gives access to the dataset's information and metadata.
  - load_dataset: Loads a dataset from a dataset builder and returns the dataset object for data access.
  - Dataset: Represents a dataset, providing access to data-related operations.

  transformers modules:

  - AutoTokenizer: Loads the pre-trained tokenizers for NLP tasks.
  - AutoModel: A model loading class for automatically loading pre-trained models for NLP tasks.

  torch modules:

  - clamp: Limits tensor values element-wise to a given range.
  - sum: Computes the sum of tensor elements along specified dimensions.

Building a question-answering architecture

In this section, you'll learn to create a collection, insert data into the collection, and perform search operations by providing an input in question-answer format.

- Declare the parameters. Make sure to replace EXTERNAL_IP_ADDRESS with the actual value.

      DATASET = 'squad'
      MODEL = 'bert-base-uncased'
      TOKENIZATION_BATCH_SIZE = 1000
      INFERENCE_BATCH_SIZE = 64
      INSERT_RATIO = .001
      COLLECTION_NAME = 'huggingface_db'
      DIMENSION = 768
      LIMIT = 10
      MILVUS_HOST = "EXTERNAL_IP_ADDRESS"
      MILVUS_PORT = "19530"

  Here's what each parameter represents:

  - DATASET: Defines the HuggingFace dataset to use for searching answers.
  - MODEL: Defines the transformer to use for creating embeddings.
  - TOKENIZATION_BATCH_SIZE: Determines how many texts are processed at once during tokenization, and helps speed up tokenization by using parallelism.
  - INFERENCE_BATCH_SIZE: Sets the batch size for model inference, which affects how efficiently the embeddings are generated. You can reduce the batch size to 32 or 18 when using a smaller GPU size.
  - INSERT_RATIO: Controls the portion of the text data to be converted into embeddings, which limits the amount of data indexed for vector search.
  - COLLECTION_NAME: Sets the name of the collection you are going to create.
  - DIMENSION: Sets the size of an individual embedding you are going to store in the collection. The value 768 matches the hidden size of bert-base-uncased.
  - LIMIT: Sets the number of results to search for and to display in the output.
  - MILVUS_HOST: Sets the external IP address used to access the deployed Milvus database.
  - MILVUS_PORT: Sets the port on which the deployed Milvus database is exposed.
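To get a feel for how INSERT_RATIO translates into rows, the arithmetic can be sketched as below. The row counts are an assumption based on the published SQuAD v1.1 split sizes, the round-up rule is assumed to mirror how the test split is sized, and the helper name rows_to_insert is hypothetical. The result is consistent with the num_rows: 99 that the tutorial reports after insertion.

```python
import math

# Assumed SQuAD v1.1 split sizes; verify against the dataset you actually load.
SQUAD_TRAIN_ROWS = 87_599
SQUAD_VALIDATION_ROWS = 10_570

def rows_to_insert(total_rows: int, insert_ratio: float) -> int:
    """Estimate how many rows land in the test split (hypothetical helper).

    Assumes the test size is rounded up, so any positive ratio
    yields at least one row.
    """
    return math.ceil(total_rows * insert_ratio)

total = SQUAD_TRAIN_ROWS + SQUAD_VALIDATION_ROWS
print(total)                         # 98169
print(rows_to_insert(total, 0.001))  # 99
```

Raising INSERT_RATIO increases the number of embeddings to compute and index, so it is worth starting small while testing the pipeline.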

- Connect to the external Milvus database you deployed, using the external IP address and port on which Milvus is exposed. Make sure to replace the user and password field values with appropriate values. If you're accessing the database for the first time, the defaults are user = root and password = Milvus.

      connections.connect(host=MILVUS_HOST, port=MILVUS_PORT, user="USER", password="PASSWORD")
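One way to avoid hard-coding the address and credentials is to read them from the environment. The sketch below only builds the keyword arguments; the MILVUS_* variable names and the helper milvus_connection_args are hypothetical, and the fallback credentials are assumed to be the Milvus defaults for a fresh deployment.

```python
import os

def milvus_connection_args(env=os.environ):
    """Collect keyword arguments for pymilvus connections.connect().

    The MILVUS_* environment variable names are hypothetical, chosen
    for this sketch; the fallbacks are assumed first-login defaults.
    """
    return {
        "host": env.get("MILVUS_HOST", "EXTERNAL_IP_ADDRESS"),
        "port": env.get("MILVUS_PORT", "19530"),
        "user": env.get("MILVUS_USER", "root"),
        "password": env.get("MILVUS_PASSWORD", "Milvus"),
    }

# 203.0.113.10 is a documentation-only example address.
args = milvus_connection_args({"MILVUS_HOST": "203.0.113.10"})
print(args["host"], args["port"])
# With pymilvus installed, the result could be passed on as:
# connections.connect(**args)
```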

Creating a collection

In this section, you'll learn to create a collection and define its schema to store the content from the dataset appropriately. You'll also learn to create indexes and load the collection.

- Check whether the collection exists. If it does, it is deleted to avoid any conflicts.

      if utility.has_collection(COLLECTION_NAME):
          utility.drop_collection(COLLECTION_NAME)

- Create a collection named huggingface_db and define the collection schema.

      fields = [
          FieldSchema(name='id', dtype=DataType.INT64, is_primary=True, auto_id=True),
          FieldSchema(name='original_question', dtype=DataType.VARCHAR, max_length=1000),
          FieldSchema(name='answer', dtype=DataType.VARCHAR, max_length=1000),
          FieldSchema(name='original_question_embedding', dtype=DataType.FLOAT_VECTOR, dim=DIMENSION)
      ]
      schema = CollectionSchema(fields=fields)
      collection = Collection(name=COLLECTION_NAME, schema=schema)

  The following fields define the schema of the collection:

  - id: Primary field by which all the database entries are identified.
  - original_question: The field that stores the original question, against which the question you ask is matched.
  - answer: The field holding the answer to each original_question.
  - original_question_embedding: Contains the embedding of each entry in original_question, used to perform similarity search with the question you gave as input.
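Because id is generated automatically (auto_id=True), data written to this collection only needs the remaining three fields, supplied as parallel lists in schema order, and each string has to fit the 1000-length VARCHAR limit. Below is a minimal sketch of that preparation step with plain Python lists; the helper names fit_varchar and to_columns are hypothetical, the two-dimensional embeddings stand in for 768-dimensional ones, and nothing here calls Milvus itself.

```python
MAX_VARCHAR = 1000  # matches max_length in the schema

def fit_varchar(text, limit=MAX_VARCHAR):
    """Trim text to at most `limit` characters, marking the cut with '...'.

    Note: this counts characters; if the limit is enforced in bytes,
    non-ASCII text would need a stricter cut.
    """
    if len(text) <= limit:
        return text
    return text[: limit - 3] + "..."

def to_columns(rows):
    """Turn row dicts into column lists ordered like the schema (minus id)."""
    questions = [fit_varchar(r["question"]) for r in rows]
    answers = [fit_varchar(r["answer"]) for r in rows]
    embeddings = [list(r["embedding"]) for r in rows]
    return [questions, answers, embeddings]

rows = [
    {"question": "When was the Tower constructed?", "answer": "1787", "embedding": [0.1, 0.2]},
    {"question": "Q" * 1200, "answer": "A" * 5, "embedding": [0.3, 0.4]},
]
columns = to_columns(rows)
print(len(columns[0][1]))  # 1000
print(columns[1])          # ['1787', 'AAAAA']
```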

- Create an index for the original_question_embedding field to perform similarity search.

      index_params = {
          'metric_type': 'L2',
          'index_type': 'IVF_FLAT',
          'params': {'nlist': 1536}
      }
      collection.create_index(field_name="original_question_embedding", index_params=index_params)

  Upon successful index creation for the specified field, the output below is displayed:

      Status(code=0, message=)

- Load the collection to make sure it is ready to perform search operations.

      collection.load()
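IVF_FLAT is an inverted-file index: vectors are grouped into nlist clusters, and a query is compared only against the vectors in the clusters whose centroids lie nearest to it. The toy sketch below illustrates the idea in pure Python. It is not how Milvus implements the index: the centroids are picked by hand rather than learned, and the function names (build_ivf, ivf_search) and the nprobe argument are chosen for illustration.

```python
import math

def l2(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def build_ivf(vectors, centroids):
    """Assign each vector index to the bucket of its nearest centroid."""
    buckets = {i: [] for i in range(len(centroids))}
    for idx, vec in enumerate(vectors):
        nearest = min(range(len(centroids)), key=lambda c: l2(vec, centroids[c]))
        buckets[nearest].append(idx)
    return buckets

def ivf_search(query, vectors, centroids, buckets, nprobe=1, limit=2):
    """Scan only the nprobe closest buckets; return (index, distance) pairs."""
    order = sorted(range(len(centroids)), key=lambda c: l2(query, centroids[c]))
    candidates = [idx for c in order[:nprobe] for idx in buckets[c]]
    ranked = sorted(candidates, key=lambda idx: l2(query, vectors[idx]))
    return [(idx, l2(query, vectors[idx])) for idx in ranked[:limit]]

vectors = [[0.0, 0.1], [0.2, 0.0], [5.0, 5.1], [5.2, 4.9], [0.1, 0.3]]
centroids = [[0.0, 0.0], [5.0, 5.0]]  # hand-picked; a real index learns nlist of them
buckets = build_ivf(vectors, centroids)
print(buckets)  # {0: [0, 1, 4], 1: [2, 3]}

hits = ivf_search([0.1, 0.1], vectors, centroids, buckets, nprobe=1)
print([idx for idx, _ in hits])  # [0, 1]
```

Scanning fewer buckets is faster but can miss a true nearest neighbour that sits in an unprobed cluster, which is why this family of indexes is described as approximate.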

Inserting data in the collection

In this section, you'll learn to split the dataset into sets, tokenize all the questions in the dataset, create embeddings, and insert them into the collection.

- Load the dataset, split it into training and test sets, and process the test set so that the answers column is replaced by the text of the first answer.

      data_dataset = load_dataset(DATASET, split='all')
      data_dataset = data_dataset.train_test_split(test_size=INSERT_RATIO, seed=42)['test']
      data_dataset = data_dataset.map(lambda val: {'answer': val['answers']['text'][0]}, remove_columns=['answers'])

- Initialize the tokenizer.

      tokenizer = AutoTokenizer.from_pretrained(MODEL)

- Define the function to tokenize the questions.

      def tokenize_question(batch):
          results = tokenizer(
              batch['question'],
              add_special_tokens=True,
              truncation=True,
              padding="max_length",
              return_attention_mask=True,
              return_tensors="pt"
          )
          batch['input_ids'] = results['input_ids']
          batch['token_type_ids'] = results['token_type_ids']
          batch['attention_mask'] = results['attention_mask']
          return batch

- Tokenize each question entry using the tokenize_question function defined earlier, and set the output to the torch format, which is compatible with PyTorch-based Machine Learning models.

      data_dataset = data_dataset.map(tokenize_question, batch_size=TOKENIZATION_BATCH_SIZE, batched=True)
      data_dataset.set_format('torch', columns=['input_ids', 'token_type_ids', 'attention_mask'], output_all_columns=True)

- Load the pre-trained model, pass it the tokenized questions, generate the embeddings from the questions, and insert them into the dataset as question_embedding.

      model = AutoModel.from_pretrained(MODEL)

      def embed(batch):
          # Token-level embeddings from the last hidden state
          sentence_embs = model(
              input_ids=batch['input_ids'],
              token_type_ids=batch['token_type_ids'],
              attention_mask=batch['attention_mask']
          )[0]
          # Average the token embeddings, ignoring padded positions
          input_mask_expanded = batch['attention_mask'].unsqueeze(-1).expand(sentence_embs.size()).float()
          batch['question_embedding'] = sum(sentence_embs * input_mask_expanded, 1) / clamp(input_mask_expanded.sum(1), min=1e-9)
          return batch

      data_dataset = data_dataset.map(embed, remove_columns=['input_ids', 'token_type_ids', 'attention_mask'], batched=True, batch_size=INFERENCE_BATCH_SIZE)

- Insert the questions into the collection.

      def insert_function(batch):
          insertable = [
              batch['question'],
              # Shorten long answers so they fit the VARCHAR limit
              [x[:995] + '...' if len(x) > 999 else x for x in batch['answer']],
              batch['question_embedding'].tolist()
          ]
          collection.insert(insertable)

      data_dataset.map(insert_function, batched=True, batch_size=64)
      collection.flush()

  The output will look like this:

      Dataset({
          features: ['id', 'title', 'context', 'question', 'answer', 'input_ids', 'token_type_ids', 'attention_mask', 'question_embedding'],
          num_rows: 99
      })
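The embed function averages the token vectors of each question while ignoring padding, a technique often called masked mean pooling. The pure-Python sketch below reproduces that arithmetic for a single sequence on a tiny hand-made example, so no model or PyTorch is needed; the function name masked_mean_pool and the toy numbers are assumptions for illustration.

```python
def masked_mean_pool(token_vectors, attention_mask, eps=1e-9):
    """Average the token vectors whose mask is 1, ignoring padded positions.

    Mirrors sum(embs * mask, dim=1) / clamp(mask.sum(dim=1), min=eps)
    for one sequence.
    """
    dim = len(token_vectors[0])
    totals = [0.0] * dim
    for vec, keep in zip(token_vectors, attention_mask):
        for i in range(dim):
            totals[i] += vec[i] * keep
    # The lower bound plays the role of clamp and avoids division by zero.
    count = max(float(sum(attention_mask)), eps)
    return [t / count for t in totals]

# Three real tokens followed by one padding token (mask 0).
tokens = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [100.0, 100.0]]
mask = [1, 1, 1, 0]
print(masked_mean_pool(tokens, mask))  # [3.0, 4.0]
```

Without the mask, the padding vector would pull the average far away from the real tokens, which is why the attention mask is carried through to the pooling step.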

Generating responses

In this section, you'll learn to provide a prompt, tokenize and embed the prompt to perform similarity search, and generate the most relevant responses.

- Create a prompt dataset. You can replace the question with any custom prompt, and you can also change the number of questions per prompt.

      questions = {'question': ['When was maths invented?']}
      question_dataset = Dataset.from_dict(questions)

- Tokenize and embed the prompt.

      question_dataset = question_dataset.map(tokenize_question, batched=True, batch_size=TOKENIZATION_BATCH_SIZE)
      question_dataset.set_format('torch', columns=['input_ids', 'token_type_ids', 'attention_mask'], output_all_columns=True)
      question_dataset = question_dataset.map(embed, remove_columns=['input_ids', 'token_type_ids', 'attention_mask'], batched=True, batch_size=INFERENCE_BATCH_SIZE)

- Define the search function that performs search operations using the embeddings created earlier. The retrieved data is organized into lists and returned as a dictionary.

      def search(batch):
          res = collection.search(
              batch['question_embedding'].tolist(),
              anns_field='original_question_embedding',
              param={},
              output_fields=['answer', 'original_question'],
              limit=LIMIT
          )
          overall_id = []
          overall_distance = []
          overall_answer = []
          overall_original_question = []
          for hits in res:
              ids = []
              distance = []
              answer = []
              original_question = []
              for hit in hits:
                  ids.append(hit.id)
                  distance.append(hit.distance)
                  answer.append(hit.entity.get('answer'))
                  original_question.append(hit.entity.get('original_question'))
              overall_id.append(ids)
              overall_distance.append(distance)
              overall_answer.append(answer)
              overall_original_question.append(original_question)
          return {
              'id': overall_id,
              'distance': overall_distance,
              'answer': overall_answer,
              'original_question': overall_original_question
          }

- Perform the search operation by applying the previously defined search function to the question_dataset.

      question_dataset = question_dataset.map(search, batched=True, batch_size=1)
      for x in question_dataset:
          print()
          print('Question:')
          print(x['question'])
          print('Answer, Distance, Original Question')
          for result in zip(x['answer'], x['distance'], x['original_question']):
              print(result)

  The output will look like this:

      Question:
      When was maths invented?
      Answer, Distance, Original Question
      ('until 1870', tensor(33.3018), 'When did the Papal States exist?')
      ('October 1992', tensor(34.8276), 'When were free elections held?')
      ('1787', tensor(36.0596), 'When was the Tower constructed?')
      ('Poland, Bulgaria, the Czech Republic, Slovakia, Hungary, Albania, former East Germany and Cuba', tensor(38.3254), 'Where was Russian schooling mandatory in the 20th century?')
      ('6,000 years', tensor(41.9444), 'How old did biblical scholars think the Earth was?')
      ('1992', tensor(42.2079), 'In what year was the Premier League created?')
      ('1981', tensor(44.7781), "When was ZE's Mutant Disco released?")
      ('Medieval Latin', tensor(46.9699), "What was the Latin of Charlemagne's era later known as?")
      ('taxation', tensor(49.2372), 'How did Hobson argue to rid the world of imperialism?')
      ('light weight, relative unbreakability and low surface noise', tensor(49.5037), "What were advantages of vinyl in the 1930's?")

In the above output, the 10 closest answers to the question you asked are printed from most to least similar, along with the original questions those answers belong to. The tensor value shown with each answer is the distance between the embedding of your question and the embedding of the stored question. A smaller value means that the answer is more relevant to the question you asked.
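The ranking in this output follows from the L2 metric configured on the index: candidates are ordered by their distance to the prompt embedding, smallest first. Below is a minimal pure-Python sketch of that ordering, using made-up two-dimensional vectors in place of the 768-dimensional BERT embeddings. The stored entries and the helper name top_k are hypothetical, and this is an exhaustive scan rather than an indexed search.

```python
import math

def top_k(query, entries, k):
    """Return the k entries closest to query by Euclidean (L2) distance."""
    scored = [
        (math.dist(query, entry["embedding"]), entry["question"])
        for entry in entries
    ]
    scored.sort(key=lambda pair: pair[0])  # smallest distance first
    return scored[:k]

entries = [
    {"question": "far", "embedding": [9.0, 9.0]},
    {"question": "near", "embedding": [1.0, 1.0]},
    {"question": "middle", "embedding": [4.0, 5.0]},
]
results = top_k([1.0, 2.0], entries, k=2)
for distance, question in results:
    print(question, distance)
```

Here k plays the role of LIMIT. Note that a nearest neighbour is always returned even when nothing in the collection is truly related to the prompt, so large distances are a hint to treat the answers with caution.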

Conclusion

In this article, you learned how to build a question-answering system using a HuggingFace dataset and the Milvus database. The tutorial guided you through the steps to create embeddings from a dataset, store them in a collection, and then perform similarity search to find the most relevant answers for the prompt by creating the embedding of the question provided and computing its distance to the stored embeddings.

This is a sponsored article by Vultr. Vultr is the world’s largest privately-held cloud computing platform. A favourite with developers, Vultr has served over 1.5 million customers across 185 countries with flexible, scalable, global Cloud Compute, Cloud GPU, Bare Metal, and Cloud Storage solutions. Learn more about Vultr.